Prompt Chaining Pattern: A Powerful Approach to Complex AI Tasks

Chapter 1: Prompt Chaining

Prompt Chaining Pattern Overview

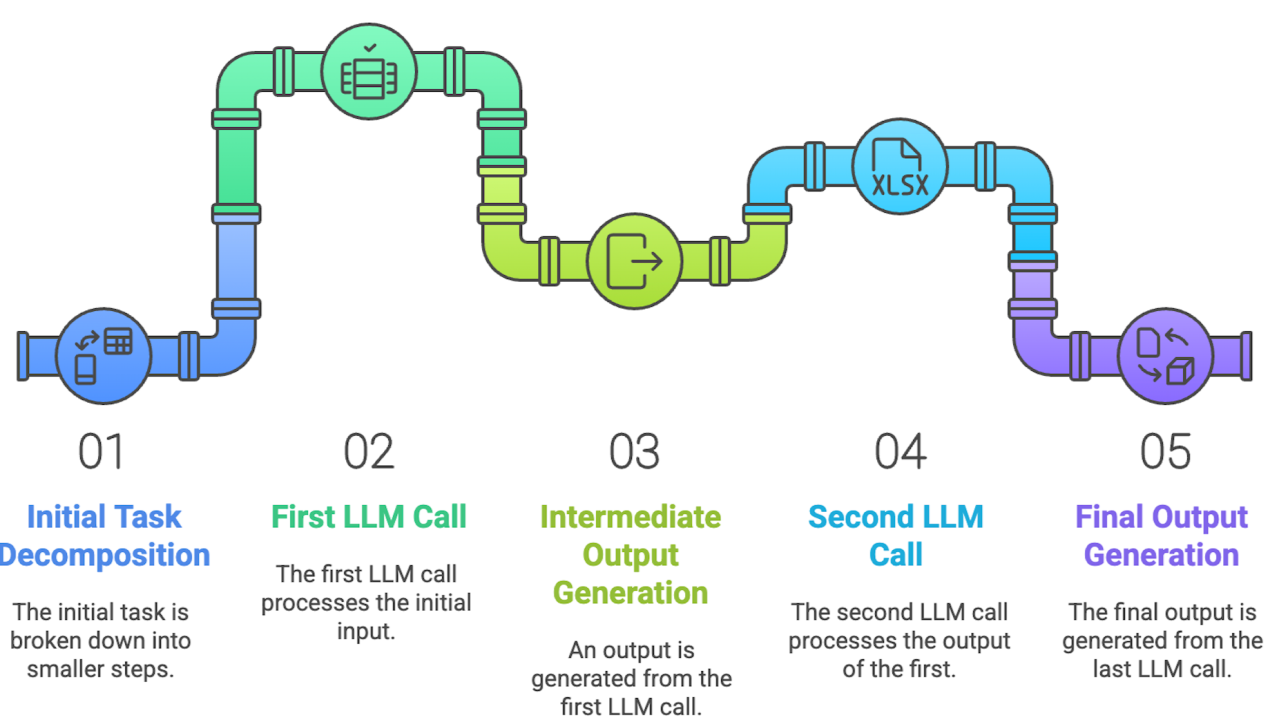

Prompt chaining, sometimes called the Pipeline pattern, is a technique for handling intricate tasks with large language models (LLMs). Rather than expecting an LLM to solve a complex problem in a single, monolithic step, prompt chaining applies a divide-and-conquer strategy.

The core idea is to break the original problem into a sequence of smaller, more manageable sub-problems. Each sub-problem is addressed by its own purpose-built prompt, and the output of one prompt is passed as input to the next prompt in the chain.

This sequential processing technique introduces modularity and clarity into the interaction with LLMs:

- Complex tasks are decomposed into smaller steps, making them easier to understand and debug.

- Each step can be optimized independently, focusing on one aspect of the problem.

- Output from one step acts as input for the next, forming a dependency chain.

- External knowledge, APIs, or databases can be integrated between steps.

- The pattern enables building sophisticated AI agents capable of multi-step reasoning, planning, and decision-making.
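
The properties listed above can be illustrated with a minimal sketch in plain Python. Every name here (`run_chain`, `fake_llm`, `summarize`, `add_reference_data`, `draft_reply`) is hypothetical, and `fake_llm` is a stand-in for a real model call so the example runs offline. It assumes each step takes text and returns text.

```python
from typing import Callable, List

# A step is any function from text to text: an LLM call, an API lookup,
# or plain Python. All names in this sketch are hypothetical.
Step = Callable[[str], str]


def run_chain(steps: List[Step], initial_input: str) -> str:
    """Run the steps in order, feeding each step's output into the next."""
    data = initial_input
    for step in steps:
        data = step(data)
    return data


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"<response to: {prompt}>"


def summarize(text: str) -> str:
    return fake_llm(f"Summarize the following text: {text}")


def add_reference_data(summary: str) -> str:
    # A non-LLM step: the hard-coded string stands in for a database or API lookup.
    return f"{summary}\nReference data: (none found)"


def draft_reply(context: str) -> str:
    return fake_llm(f"Draft a short reply using this context: {context}")


result = run_chain([summarize, add_reference_data, draft_reply], "Quarterly sales rose.")
print(result)
```

Because every step shares one signature, a step can be tested, replaced, or reordered without touching the others.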

Limitations of Single Prompts

Using one large prompt for a complex task often leads to issues:

- Instruction neglect: parts of the prompt are overlooked.

- Contextual drift: the model loses track of the initial context.

- Error propagation: early errors compound.

- Insufficient context window: not enough space to include all details.

- Hallucination: higher chance of incorrect information.

Example: A single query that asks the model to analyze a market research report, summarize its findings, identify trends, extract data points, and draft an email is likely to fail in part. The model might summarize well but fail at data extraction or email drafting.

Enhanced Reliability Through Sequential Decomposition

Prompt chaining addresses these challenges by breaking the task into focused steps:

- Summarization Prompt: "Summarize the key findings of the following market research report: [text]."

- Trend Identification Prompt: "Using the summary, identify the top three emerging trends and extract supporting data points: [output from step 1]."

- Email Composition Prompt: "Draft a concise email to the marketing team that outlines the following trends and supporting data: [output from step 2]."

Benefits:

- Simpler, more controlled steps.

- Reduced cognitive load for the model.

- Clearer modular pipeline.

- Ability to assign roles to prompts (e.g., "Market Analyst", "Trade Analyst", "Writer").
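
The three-step chain and the role assignment described above can be sketched as follows. This is a minimal illustration rather than a definitive implementation: `run_report_chain`, `fake_llm`, and the exact wording of the role prefixes are assumptions, and `fake_llm` replaces a real model client so the flow of data between steps is visible.

```python
from typing import Callable, List

# Prompt templates based on the three steps above; the role prefixes are illustrative.
SUMMARIZE = (
    "You are a Market Analyst. Summarize the key findings of the following "
    "market research report: {report}"
)
TRENDS = (
    "You are a Trade Analyst. Using the summary, identify the top three emerging "
    "trends and extract supporting data points: {summary}"
)
EMAIL = (
    "You are a Writer. Draft a concise email to the marketing team that outlines "
    "the following trends and supporting data: {trends}"
)


def run_report_chain(report: str, call_llm: Callable[[str], str]) -> str:
    """Run the three prompts in sequence; each output fills the next template."""
    summary = call_llm(SUMMARIZE.format(report=report))
    trends = call_llm(TRENDS.format(summary=summary))
    return call_llm(EMAIL.format(trends=trends))


# Offline stand-in for a model: records each prompt and returns a numbered placeholder.
prompts_seen: List[str] = []


def fake_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    return f"[output of step {len(prompts_seen)}]"


email = run_report_chain("(report text)", fake_llm)
print(email)
for prompt in prompts_seen:
    print("-", prompt)
```

Passing the model call in as a parameter keeps the chain logic independent of any particular provider and makes it easy to test with a stub.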

The Role of Structured Output

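The reliability of a prompt chain depends on the integrity of the data passed between steps. If one step's output is ambiguous or poorly formatted, the next step may misread it and fail. Specifying a structured output format such as JSON or XML lets downstream steps parse the data reliably.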

Example JSON Output:

```json
{
  "trends": [
    {
      "trend_name": "AI-Powered Personalization",
      "supporting_data": "73% of consumers prefer to do business with brands that use personal information."
    },
    {
      "trend_name": "Sustainable and Ethical Brands",
      "supporting_data": "Sales of ESG-related products grew 28% over five years, compared to 20% without ESG claims."
    }
  ]
}
```
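
One way to enforce such a format between steps is to parse and validate the output before handing it on. The sketch below uses only the standard library; `parse_trends` is a hypothetical helper, and the checks assume the `trends`, `trend_name`, and `supporting_data` fields shown in the example above.

```python
import json
from typing import Dict, List


def parse_trends(raw: str) -> List[Dict[str, str]]:
    """Parse a step's JSON output and check the fields the next step relies on."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    trends = data.get("trends")
    if not isinstance(trends, list) or not trends:
        raise ValueError("expected a non-empty 'trends' list")
    for item in trends:
        if not isinstance(item, dict):
            raise ValueError("each trend must be a JSON object")
        for key in ("trend_name", "supporting_data"):
            if not isinstance(item.get(key), str):
                raise ValueError(f"trend is missing string field '{key}'")
    return trends


raw_output = json.dumps({
    "trends": [
        {
            "trend_name": "AI-Powered Personalization",
            "supporting_data": "73% of consumers prefer to do business with brands that use personal information.",
        }
    ]
})

for trend in parse_trends(raw_output):
    print(f"- {trend['trend_name']}: {trend['supporting_data']}")
```

Failing fast at the boundary between steps keeps a malformed output from silently corrupting the rest of the chain.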

Practical Applications & Use Cases

- Information Processing Workflows

  - Extract → Summarize → Entity Recognition → Database Query → Report Generation.

  - Used in research assistants and report automation.

- Complex Query Answering

  - Break down sub-questions → Retrieve info → Synthesize results.

  - Useful for historical/analytical questions.

- Data Extraction & Transformation

  - Iterative extraction with validation and correction.

  - Example: processing invoices, forms, OCR text.

- Content Generation Workflows

  - Ideation → Outline → Drafting → Refinement.

  - Used for narratives, documentation, structured content.

- Conversational Agents with State

  - Maintain conversational context through chained prompts.

  - Enables multi-turn, context-aware dialogue.

- Code Generation and Refinement

  - Outline → Draft → Debug → Refine → Add tests/docs.

  - Supports modular, iterative software development.

- Multimodal Multi-Step Reasoning

  - Example: interpret images with embedded text and labels → map to table → structured interpretation.
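
As an illustration of the "iterative extraction with validation and correction" use case listed above, the following sketch retries an extraction prompt until the output passes a check. The schema (`invoice_number`, `total`), the prompt wording, and the helper names are all hypothetical, and `scripted_llm` is a scripted stand-in that fails once and then succeeds.

```python
import json
from typing import Callable, Dict, Tuple

REQUIRED_FIELDS = ("invoice_number", "total")  # hypothetical schema


def validate(raw: str) -> Tuple[bool, str]:
    """Return (ok, problem description) for one model output."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return False, "output is not a JSON object"
    missing = [field for field in REQUIRED_FIELDS if field not in data]
    if missing:
        return False, "missing fields: " + ", ".join(missing)
    return True, ""


def extract_with_retries(text: str, call_llm: Callable[[str], str], max_attempts: int = 3) -> Dict:
    """Extract fields, validate the result, and re-prompt with the failure reason."""
    fields = ", ".join(REQUIRED_FIELDS)
    prompt = f"Extract {fields} as JSON from:\n{text}"
    for _ in range(max_attempts):
        raw = call_llm(prompt)
        ok, problem = validate(raw)
        if ok:
            return json.loads(raw)
        # Correction step: feed the failure reason back into the next prompt.
        prompt = (
            f"Your previous output was rejected ({problem}). "
            f"Return only JSON with {fields} for:\n{text}"
        )
    raise ValueError(f"no valid output after {max_attempts} attempts")


# Scripted stand-in for a model: the first reply lacks a field, the second is complete.
replies = iter(['{"invoice_number": "INV-1"}', '{"invoice_number": "INV-1", "total": 42.5}'])


def scripted_llm(prompt: str) -> str:
    return next(replies)


print(extract_with_retries("Invoice INV-1, total due 42.50", scripted_llm))
```

Capping the number of attempts keeps a persistently failing step from looping forever.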

Hands-On Code Example (LangChain)

```python
import os

from langchain_openai import ChatOpenAI
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.output_parsers import StrOutputParser

# Initialize the model (ChatOpenAI reads the API key from the OPENAI_API_KEY environment variable)
llm = ChatOpenAI(temperature=0)

# --- Prompt 1: Extract Information ---
prompt_extract = ChatPromptTemplate.from_template(
    "Extract the technical specifications from the following text:\n\n{text_input}"
)

# --- Prompt 2: Transform to JSON ---
prompt_transform = ChatPromptTemplate.from_template(
    "Transform the following specifications into a JSON object with 'cpu', 'memory', and 'storage' as keys:\n\n{specifications}"
)

# --- Build the Chain ---
# Step 1: prompt -> model -> plain string
extraction_chain = prompt_extract | llm | StrOutputParser()

# Step 2: the dict routes step 1's output into the {specifications} variable of the second prompt
full_chain = (
    {"specifications": extraction_chain}
    | prompt_transform
    | llm
    | StrOutputParser()
)

# --- Run the Chain ---
input_text = "The new laptop features a 3.5 GHz octa-core processor, 16GB RAM, and 1TB NVMe SSD."

final_result = full_chain.invoke({"text_input": input_text})

print("\n--- Final JSON Output ---")
print(final_result)
```
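
The chain above returns `final_result` as a plain string, so a later step still has to turn it into data. Models sometimes wrap JSON in a Markdown code fence, so a tolerant parser can help. The sketch below is one possible approach using only the standard library; `parse_spec_json` and `EXPECTED_KEYS` are hypothetical names, and the sample string is made up rather than real model output.

```python
import json
import re
from typing import Any, Dict

EXPECTED_KEYS = {"cpu", "memory", "storage"}  # keys requested by the second prompt
FENCE = "`" * 3  # Markdown code-fence marker

# Matches text that consists solely of one fenced block, optionally tagged "json".
FENCED = re.compile(
    re.escape(FENCE) + r"(?:json)?\s*(.*?)\s*" + re.escape(FENCE),
    flags=re.DOTALL,
)


def parse_spec_json(raw: str) -> Dict[str, Any]:
    """Strip an optional Markdown fence, parse the JSON, and check its keys."""
    text = raw.strip()
    match = FENCED.fullmatch(text)
    if match:
        text = match.group(1)
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = EXPECTED_KEYS - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# Made-up sample shaped like a fenced model reply.
sample = (
    FENCE
    + 'json\n{"cpu": "3.5 GHz octa-core", "memory": "16GB", "storage": "1TB NVMe SSD"}\n'
    + FENCE
)
specs = parse_spec_json(sample)
print(specs["cpu"], "|", specs["memory"], "|", specs["storage"])
```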

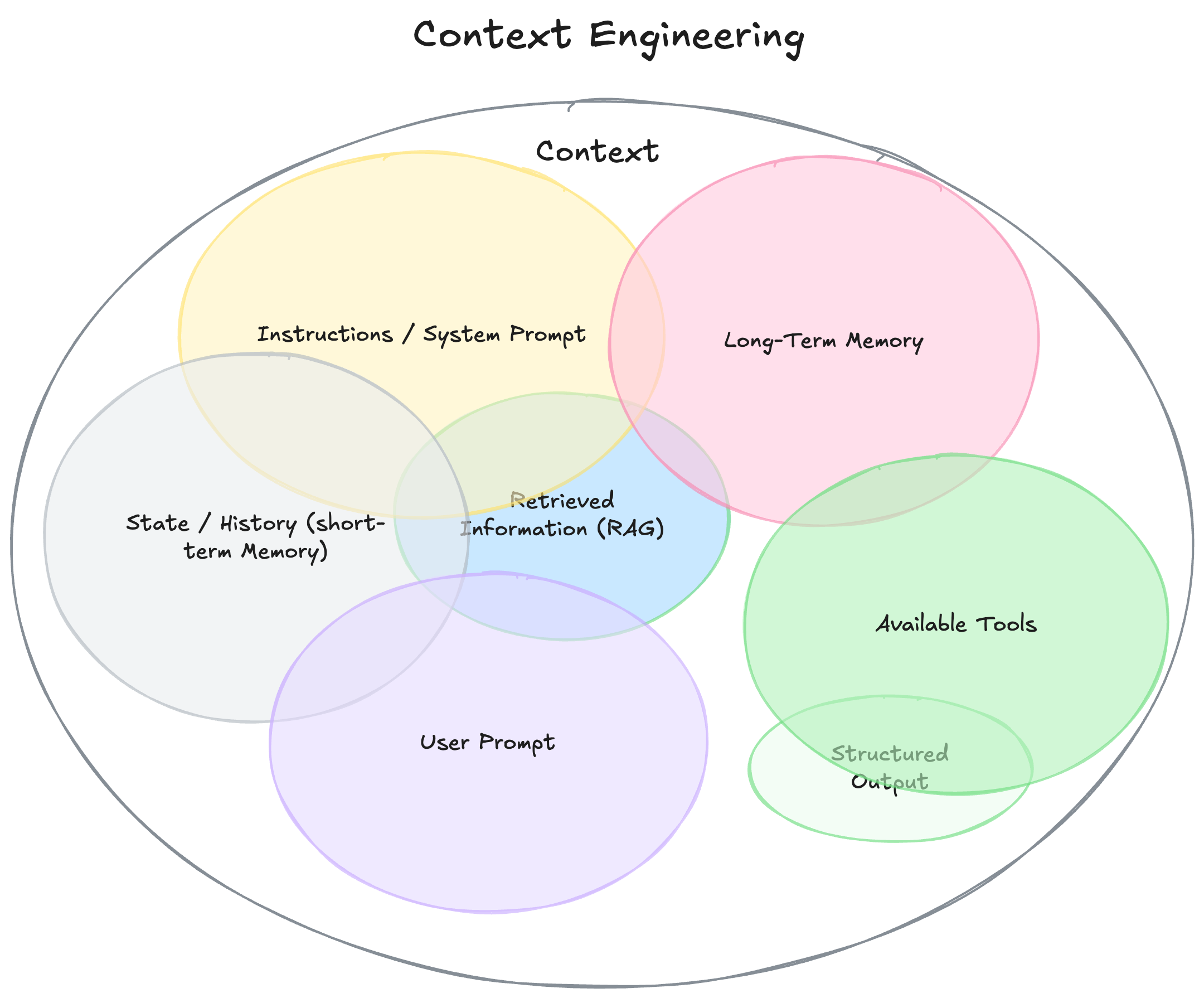

Context Engineering vs Prompt Engineering

- Prompt Engineering: Focus on phrasing a single user query.

- Context Engineering: Constructing a rich informational environment before generation:

  - System prompts (role & tone).

  - Retrieved documents.

  - Tool outputs (e.g., API results).

  - Implicit data (user identity, history, environment).

Fig.1 – Context Engineering builds an environment that maximizes model performance.

Context Engineering reframes the use of AI from “answering a question” to “acting in a well-structured environment.”
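
To make the layers of context concrete, here is a minimal sketch that assembles them into a single request. The function name `build_context`, the section labels, and the sample values are all invented for illustration. The `role`/`content` message shape is an assumption modeled on common chat APIs, not something the text prescribes.

```python
from typing import Dict, List


def build_context(
    system_prompt: str,
    retrieved_docs: List[str],
    tool_outputs: Dict[str, str],
    implicit_data: Dict[str, str],
    user_query: str,
) -> List[Dict[str, str]]:
    """Assemble the context layers into a chat-style message list."""
    sections = []
    if retrieved_docs:
        sections.append(
            "Retrieved documents:\n" + "\n".join(f"- {doc}" for doc in retrieved_docs)
        )
    if tool_outputs:
        sections.append(
            "Tool outputs:\n"
            + "\n".join(f"- {name}: {value}" for name, value in tool_outputs.items())
        )
    if implicit_data:
        sections.append(
            "User context:\n"
            + "\n".join(f"- {key}: {value}" for key, value in implicit_data.items())
        )
    sections.append(f"Question: {user_query}")
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": "\n\n".join(sections)},
    ]


# All values below are made-up sample data.
messages = build_context(
    system_prompt="You are a concise travel assistant.",
    retrieved_docs=["Refund policy: full refund up to 24 hours before departure."],
    tool_outputs={"flight_status": "delayed 45 minutes"},
    implicit_data={"home_airport": "LIS"},
    user_query="Can I still get a refund?",
)
for message in messages:
    print(f"[{message['role']}]\n{message['content']}\n")
```

The user's question is only the last of several sections, which is the practical difference from phrasing a single query.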

At a Glance

- What: Complex tasks overwhelm LLMs if handled in one prompt.

- Why: Prompt chaining improves reliability by breaking tasks into steps.

- Rule of Thumb: Use chaining when a task is too complex for a single prompt, requires external tools, or needs multi-step reasoning or state.

Fig.2 – Prompt Chaining Pattern: each step’s output feeds the next step.

Key Takeaways

- Prompt Chaining = divide complex tasks into smaller steps.

- Improves reliability and manageability.

- Frameworks: LangChain, LangGraph, CrewAI, Google ADK.

- Foundational for agentic systems with planning, reasoning, and execution.

Conclusion

Prompt chaining provides a robust framework for guiding LLMs through multi-step tasks.

- Enhances reliability & control.

- Enables agentic behaviors like planning, tool use, and state management.

- Essential for building context-aware intelligent systems.